Introduction

How do you know whether you can run terraform apply against your infrastructure without negatively affecting critical business applications? You can run terraform validate and terraform plan to check your configuration, but will that be enough? Whether you’ve updated some HashiCorp Terraform configuration or upgraded to a new version of a module, you want to catch errors quickly, before you apply any changes to production infrastructure.

In this post, I’ll discuss some testing strategies for HashiCorp Terraform configuration and modules so that you can run terraform apply with greater confidence. As a HashiCorp Developer Advocate, I’ve compiled some advice to help Terraform users learn how infrastructure tests fit into their organization’s development practices, how testing modules differs from testing configuration, and how to manage the cost of testing. I’ve included a few testing examples that use Terraform’s native testing framework. No matter which tool you use, you can generalize the approaches outlined in this post to your overall infrastructure testing strategy. In addition to the testing tools and approaches in this post, you can find other perspectives and examples in the references at the end.

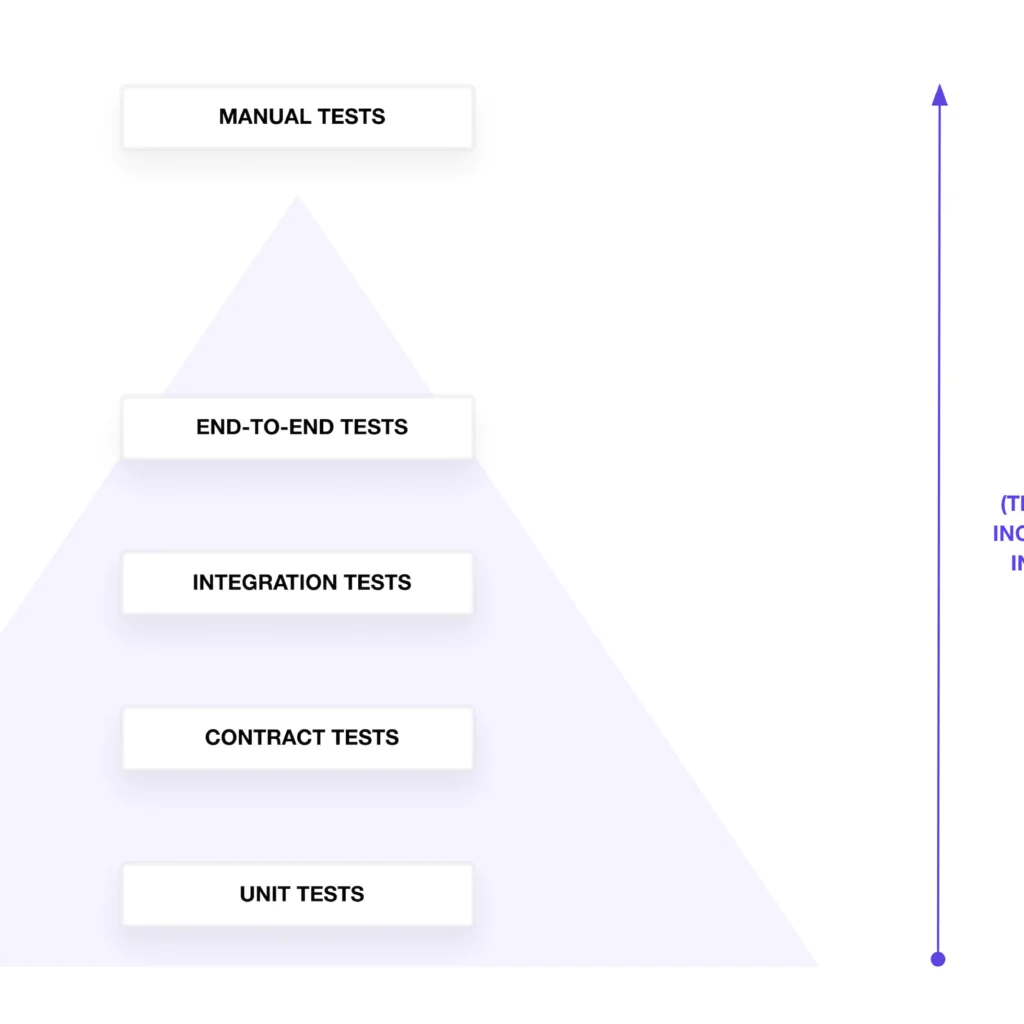

The testing pyramid

In theory, you might align your infrastructure testing strategy with the test pyramid, which groups tests by type, scope, and granularity. The pyramid suggests writing fewer tests for the categories at the top and more tests for the categories at the bottom. Tests higher in the pyramid take more time and cost more because they require more configuration and create more resources.

In practice, your tests may not follow the pyramid exactly. The pyramid offers a common framework for scoping tests that validate configuration and infrastructure resources, but real-world test suites often deviate from it. I’ll start at the base of the pyramid with unit tests and work up to end-to-end tests. Manual testing, which involves checking infrastructure functionality case by case, can demand significant time and effort and therefore adds to the cost.

Linting and formatting

Even though they don’t fit neatly into the test pyramid, checks on the integrity of your Terraform configuration are crucial. Use terraform fmt -check to verify that your configuration is formatted correctly, and terraform validate to check that it is syntactically valid and internally consistent.

In collaborative Terraform projects, it’s beneficial to assess configurations against established standards and best practices. Consider employing or building a linting tool to scrutinize your Terraform configuration for adherence to specific guidelines and patterns. For instance, a linter can confirm that team members define a Terraform variable for an instance type rather than hard-coding the value.
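As a rough illustration of the pattern such a linter would enforce, the following sketch contrasts a hard-coded instance type with one supplied through a variable. The resource names, the ami_id variable, and the t3.micro default are hypothetical; the linting rule itself would live in whatever tool your team adopts.

```hcl
# Hypothetical variables for this illustration.
variable "ami_id" {
  type        = string
  description = "AMI ID for the application server"
}

variable "instance_type" {
  type        = string
  description = "EC2 instance type for the application server"
  default     = "t3.micro"
}

# A linter following the team guideline would flag this resource,
# because the instance type is hard-coded.
resource "aws_instance" "flagged" {
  ami           = var.ami_id
  instance_type = "t3.micro"
}

# This resource follows the guideline: the instance type comes from a variable.
resource "aws_instance" "compliant" {
  ami           = var.ami_id
  instance_type = var.instance_type
}
```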

Unit tests

At the base of the pyramid, unit tests play a crucial role in verifying individual resources and configurations for their expected values. They aim to answer the fundamental question: “Does my configuration or plan contain the correct metadata?” Typically, unit tests should be designed to run independently, free from external dependencies or API calls.

To enhance test coverage, any programming language or testing tool can be employed to parse the Terraform configuration in HashiCorp Configuration Language (HCL) or JSON. This helps check for statically defined parameters, such as provider attributes with defaults or hard-coded values. However, these tests may not cover aspects like correct variable interpolation or list iteration, prompting the need for additional unit tests that parse the plan representation rather than the Terraform configuration.

Parsing configuration does not require active infrastructure resources or authentication to an infrastructure provider. Unit tests against a Terraform plan, however, require Terraform to authenticate to your infrastructure provider, because generating a plan typically involves provider API calls. These tests overlap with security testing done as policy as code, because both check attributes in the Terraform configuration for correct values.

As an example, consider a Terraform module that filters a map of services by kind, extracts each service’s IP address from its AWS-style node hostname (for example, ip-10-0-0-0), and saves the resulting list of IP addresses to a local file. Without closer inspection, it’s unclear whether the module correctly replaces the hyphens and retrieves the right IP addresses.

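```hcl
variable "services" {
  type = map(object({
    node = string
    kind = string
  }))
  description = "List of services and their metadata"
}

variable "service_kind" {
  type        = string
  description = "Service kind to search"
}

locals {
  # For each service of the requested kind, take the hostname before the
  # first ".", drop the "ip-" prefix, and convert hyphens to dots,
  # for example "ip-10-0-0-0" becomes "10.0.0.0".
  ip_addresses = toset([
    for service, service_data in var.services :
    replace(replace(split(".", service_data.node)[0], "ip-", ""), "-", ".") if service_data.kind == var.service_kind
  ])
}

# Write the IP addresses as a JSON array to a file named after the service kind.
resource "local_file" "ip_addresses" {
  content  = jsonencode(local.ip_addresses)
  filename = "./${var.service_kind}.hcl"
}
```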

To manually verify that your module retrieves only TCP services and outputs their IP addresses, you might pass an example set of services and run terraform plan. However, manual checks become impractical as the module evolves, and a later change could break its ability to retrieve the correct services and IP addresses. Unit tests ensure that the logic for filtering services by kind and retrieving their IP addresses keeps working throughout the module’s lifecycle.

The following Terraform test file contains two run blocks that validate the logic generating IP addresses for each service kind. The first confirms that the file contents will contain two IP addresses for TCP services, and the second checks that the file contents will contain one IP address for the HTTP service:

```hcl
variables {
  services = {
    "service_0" = {
      kind = "tcp"
      node = "ip-10-0-0-0"
    },
    "service_1" = {
      kind = "http"
      node = "ip-10-0-0-1"
    },
    "service_2" = {
      kind = "tcp"
      node = "ip-10-0-0-2"
    },
  }
}

run "get_tcp_services" {
  variables {
    service_kind = "tcp"
  }

  command = plan

  assert {
    condition     = jsondecode(local_file.ip_addresses.content) == ["10.0.0.0", "10.0.0.2"]
    error_message = "Parsed `tcp` services should return 2 IP addresses, 10.0.0.0 and 10.0.0.2"
  }

  assert {
    condition     = local_file.ip_addresses.filename == "./tcp.hcl"
    error_message = "Filename should include service kind `tcp`"
  }
}

run "get_http_services" {
  variables {
    service_kind = "http"
  }

  command = plan

  assert {
    condition     = jsondecode(local_file.ip_addresses.content) == ["10.0.0.1"]
    error_message = "Parsed `http` services should return 1 IP address, 10.0.0.1"
  }

  assert {
    condition     = local_file.ip_addresses.filename == "./http.hcl"
    error_message = "Filename should include service kind `http`"
  }
}
```

The test file sets mock values for a predefined set of services in the services variable. Each run block uses command = plan to inspect attributes in the Terraform plan without applying any changes, so the unit tests do not create the local file defined in the module.

This example illustrates positive testing, which validates that valid input produces the expected result. Terraform’s testing framework also supports negative testing, where you expect an error for incorrect input. Use the expect_failures attribute to capture such errors.
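As a minimal sketch of a negative test, assume the module adds a validation rule that restricts service_kind to tcp or http (this rule is hypothetical and not part of the module above). A run block can then pass an unsupported kind and list the variable in expect_failures, so the run passes only if the validation fails. The sketch assumes the file-level variables block from the earlier test file supplies services.

```hcl
# Hypothetical change to the module: only accept known service kinds.
variable "service_kind" {
  type        = string
  description = "Service kind to search"

  validation {
    condition     = contains(["tcp", "http"], var.service_kind)
    error_message = "service_kind must be \"tcp\" or \"http\"."
  }
}

# In the test file: this run passes only if the validation on
# var.service_kind reports an error for the unsupported value "udp".
run "reject_unknown_service_kind" {
  command = plan

  variables {
    service_kind = "udp"
  }

  expect_failures = [
    var.service_kind,
  ]
}
```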

If you prefer not to use Terraform’s native testing framework, alternatives include HashiCorp Sentinel, a general-purpose programming language, or a configuration testing tool of your choice. These tools can parse the JSON representation of the plan, which terraform show -json produces from a saved plan file, and verify the correctness of your Terraform logic.

In addition to testing attributes in the Terraform plan, unit tests can validate:

- The number of resources or attributes generated by for_each or count
- Values generated by for expressions
- Values produced by built-in functions
- Dependencies between modules
- Values produced by interpolation
- Expected variables or outputs marked as sensitive
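For example, a run block can assert on the number of instances a for_each creates. The following sketch assumes a hypothetical per_service resource that writes one file per service of the requested kind; with the mock services from the earlier test file, two TCP services should produce two planned instances.

```hcl
# Hypothetical module resource: one file per service of the requested kind.
resource "local_file" "per_service" {
  for_each = {
    for name, svc in var.services : name => svc if svc.kind == var.service_kind
  }

  content  = each.value.node
  filename = "./${each.key}.txt"
}

# In the test file: count the planned instances of the for_each resource.
run "one_file_per_tcp_service" {
  command = plan

  variables {
    service_kind = "tcp"
  }

  assert {
    condition     = length(local_file.per_service) == 2
    error_message = "Expected one file for each of the 2 TCP services"
  }
}
```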

To simulate a terraform apply without creating resources, unit tests can use mocks. Some cloud service providers offer community tools that mock their service APIs. Keep in mind that not all mocks accurately replicate the behavior and configuration of their target API.
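If you use Terraform 1.7 or later, terraform test can also replace a provider with a mock_provider block, which returns generated values instead of calling the real provider. The following sketch mocks the local provider so that a run with command = apply exercises the module without writing a file; the test file contents are illustrative.

```hcl
# Mock the local provider for every run in this test file (Terraform 1.7+).
mock_provider "local" {}

variables {
  services = {
    "service_0" = {
      kind = "tcp"
      node = "ip-10-0-0-0"
    }
  }
}

# With the provider mocked, this apply does not create ./tcp.hcl on disk.
run "apply_with_mocked_provider" {
  command = apply

  variables {
    service_kind = "tcp"
  }

  assert {
    condition     = local_file.ip_addresses.filename == "./tcp.hcl"
    error_message = "Filename should include service kind `tcp`"
  }
}
```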

Overall, unit tests execute rapidly, providing swift feedback. As an author of a Terraform module or configuration, you can leverage unit tests to communicate expected values to other team members. Since unit tests operate independently of infrastructure resources, they incur virtually zero cost, making frequent runs practical.

Contract tests

One level up from the base of the pyramid, contract tests verify that a configuration using a Terraform module passes properly formatted inputs. Contract tests answer the question, “Does the expected input to the module align with what I intend to pass to it?”

Contract tests play a crucial role in ensuring that the agreement between a Terraform configuration’s expected inputs to a module and the actual inputs of the module remains intact. In Terraform, most contract testing is designed to benefit the module consumer by clearly communicating how the module author anticipates its usage. To enforce specific usage patterns, authors can employ a combination of input variable validations, preconditions, and postconditions to verify the combination of inputs and highlight any errors.

For example, you might use a custom input variable validation rule to ensure that an AWS load balancer listener rule receives a valid integer priority between 1 and 50000:

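```hcl
variable "listener_rule_priority" {
  type        = number
  default     = 1
  description = "Priority of listener rule between 1 and 50000"

  validation {
    # Accept only whole numbers from 1 through 50000, inclusive.
    condition     = var.listener_rule_priority >= 1 && var.listener_rule_priority <= 50000 && floor(var.listener_rule_priority) == var.listener_rule_priority
    error_message = "The priority of listener_rule must be a whole number between 1 and 50000."
  }
}
```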

As part of input validation, you can use Terraform’s type constraints to require that a variable has an object structure, which enforces that the module receives the correct fields. This module example uses a map of objects to represent services and their expected attributes:

```hcl
variable "services" {
  type = map(object({
    node = string
    kind = string
  }))
  description = "List of services and their metadata"
}
```
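Type constraints check the shape of each object but not the values inside it. As a hypothetical extension, a validation rule on the same variable could also require every node to use the AWS-style ip- hostname that the module parses. The startswith function assumes Terraform 1.3 or later.

```hcl
variable "services" {
  type = map(object({
    node = string
    kind = string
  }))
  description = "List of services and their metadata"

  validation {
    # Every node must look like "ip-10-0-0-0" so the module can derive an IP address.
    condition     = alltrue([for svc in values(var.services) : startswith(svc.node, "ip-")])
    error_message = "Each service node must be an AWS-style hostname that begins with \"ip-\"."
  }
}
```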

In addition to custom validation rules, you can use preconditions and postconditions to verify specific resource attributes defined by the module consumer. For example, in Terraform versions before 1.9, a validation rule can refer only to its own variable, so it cannot compare two address blocks. Instead, use a precondition to check your HashiCorp Cloud Platform (HCP) network against the network in your AWS account. The following precondition rejects an HCP CIDR block that is identical to the VPC CIDR block; it does not catch partial overlaps:

```hcl
resource "hcp_hvn" "main" {
  hvn_id         = var.name
  cloud_provider = "aws"
  region         = local.hcp_region
  cidr_block     = var.hcp_cidr_block

  lifecycle {
    precondition {
      # Catches identical CIDR blocks only, not partially overlapping ones.
      condition     = var.hcp_cidr_block != var.vpc_cidr_block
      error_message = "HCP HVN must not overlap with VPC CIDR block"
    }
  }
}
```
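To reject partially overlapping blocks as well, one approach is to convert each IPv4 CIDR block into an integer range and compare the ranges. The following sketch is an illustration rather than a hardened implementation: the local value names are hypothetical, it handles IPv4 only, and it relies on cidrhost returning the network address for host number 0. It mirrors the hcp_hvn resource in the HCP example, with local.hcp_region assumed to be defined elsewhere in the module.

```hcl
locals {
  # Network address octets and prefix length for each IPv4 CIDR block.
  hcp_octets = [for o in split(".", cidrhost(var.hcp_cidr_block, 0)) : tonumber(o)]
  vpc_octets = [for o in split(".", cidrhost(var.vpc_cidr_block, 0)) : tonumber(o)]
  hcp_prefix = tonumber(split("/", var.hcp_cidr_block)[1])
  vpc_prefix = tonumber(split("/", var.vpc_cidr_block)[1])

  # First address of each block as an integer, and the first address after it.
  hcp_start = local.hcp_octets[0] * 16777216 + local.hcp_octets[1] * 65536 + local.hcp_octets[2] * 256 + local.hcp_octets[3]
  vpc_start = local.vpc_octets[0] * 16777216 + local.vpc_octets[1] * 65536 + local.vpc_octets[2] * 256 + local.vpc_octets[3]
  hcp_end   = local.hcp_start + pow(2, 32 - local.hcp_prefix)
  vpc_end   = local.vpc_start + pow(2, 32 - local.vpc_prefix)

  # Two half-open ranges overlap when each one starts before the other ends.
  cidr_blocks_overlap = local.hcp_start < local.vpc_end && local.vpc_start < local.hcp_end
}

resource "hcp_hvn" "main" {
  hvn_id         = var.name
  cloud_provider = "aws"
  region         = local.hcp_region
  cidr_block     = var.hcp_cidr_block

  lifecycle {
    precondition {
      condition     = !local.cidr_blocks_overlap
      error_message = "HCP HVN must not overlap with VPC CIDR block"
    }
  }
}
```

For example, an HCP block of 10.0.0.0/16 and a VPC block of 10.0.1.0/24 would fail this precondition, while the original inequality check would let them through.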

Contract tests play a vital role in identifying misconfigurations within modules before applying changes to live infrastructure resources. They serve as a means to validate correct identifier formats, adherence to naming standards, attribute types (such as private or public networks), and compliance with value constraints, including character limits or password requirements.
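For instance, a postcondition on a data source can enforce that a subnet supplied by the module consumer is private. The sketch below assumes a hypothetical subnet_id input and uses the map_public_ip_on_launch attribute of the AWS provider’s aws_subnet data source as a rough proxy for a public subnet.

```hcl
variable "subnet_id" {
  type        = string
  description = "ID of the subnet where the module places its resources"
}

data "aws_subnet" "selected" {
  id = var.subnet_id

  lifecycle {
    # self refers to the data source's own attributes after Terraform reads it.
    postcondition {
      condition     = !self.map_public_ip_on_launch
      error_message = "The subnet must be private: it assigns public IP addresses on launch."
    }
  }
}
```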

If you prefer not to use custom conditions in Terraform, you can use HashiCorp Sentinel, a programming language, or a configuration testing tool of your choice instead. Store these contract tests in the module repository and pull them into each Terraform configuration that uses the module through a continuous integration (CI) framework. When someone pushes a change that references the module to version control, the contract tests run automatically against the plan representation before any changes are applied.

While building unit and contract tests may require additional time and effort, they provide a valuable mechanism to catch configuration errors preemptively, before executing terraform apply. In scenarios involving larger and more intricate configurations with numerous resources, manually checking individual parameters becomes impractical. Instead, leveraging unit and contract tests allows for the efficient automation of critical configuration verifications, establishing a robust foundation for collaboration across teams and organizations. These lower-level tests effectively communicate system knowledge and expectations to teams responsible for maintaining and updating Terraform configurations.

Integration tests

The top half of the pyramid contains tests that require active infrastructure resources to run. Integration tests verify that a configuration using a Terraform module, given properly formatted inputs, actually creates its resources. The fundamental question these tests address is, “Does this module or configuration successfully create the resources?”

While a terraform apply provides a form of limited integration testing by creating and configuring resources while managing dependencies, it is advisable to augment this with additional tests that specifically validate configuration parameters on the active resources.

In the following example, a new run block in the test file applies the configuration, which creates the file, and then verifies that the file exists on the filesystem. Because the run uses command = apply, terraform test creates real resources and, after the test file finishes, destroys them, which removes the file. The test therefore checks both that the module creates the resource and that Terraform can clean it up:

```hcl
run "check_file" {
  variables {
    service_kind = "tcp"
  }

  command = apply

  assert {
    condition     = fileexists("${var.service_kind}.hcl")
    error_message = "File `${var.service_kind}.hcl` does not exist"
  }
}
```

Verifying every parameter that Terraform configures on a resource is a possibility, but it might not be the most efficient use of time and effort. Terraform providers already include acceptance tests that ensure resources are properly created, updated, and deleted with the correct configuration values. Instead, focus integration tests on validating that Terraform outputs provide accurate values or the expected number of resources. These tests are particularly useful for scenarios that can only be verified after a terraform apply, such as handling invalid configurations, nonconformant passwords, or outcomes of for_each iteration.
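As a sketch of checking an output after apply, assume the module exposes a hypothetical ip_addresses output. With the mock services from the earlier test file, the TCP run should return two addresses.

```hcl
# Hypothetical output added to the module.
output "ip_addresses" {
  value = local.ip_addresses
}

# In the test file: apply the configuration, then check the output value.
run "check_ip_address_output" {
  command = apply

  variables {
    service_kind = "tcp"
  }

  assert {
    condition     = length(output.ip_addresses) == 2
    error_message = "Expected 2 IP addresses for `tcp` services"
  }
}
```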

When selecting an integration testing framework other than terraform test, consider factors such as existing integrations and the languages your organization prefers. Integration tests help you decide whether to update your module version by confirming that the module still runs without errors.

Given the necessity to set up and tear down resources, it’s not uncommon for integration tests to take 15 minutes or more to complete, depending on the nature of the resource. Consequently, it is advisable to implement as much unit and contract testing as possible. This approach allows for swift identification of incorrect configurations, minimizing the wait time associated with resource creation and deletion.

End-to-end tests

Once you’ve applied Terraform changes to your production environment, it becomes crucial to determine whether end-user functionality has been impacted. End-to-end tests address the fundamental question: “Can someone use the infrastructure system successfully?”

For instance, in the context of upgrading the version of HashiCorp Vault, end-to-end tests can validate that application developers and operators can still retrieve a secret from Vault after the upgrade. These tests serve as a safeguard to ensure that changes do not break the expected functionality. As part of checking the proper upgrade of Vault, an end-to-end test could involve creating an example secret, retrieving it, and subsequently deleting it from the cluster.

I typically write an end-to-end test as a Terraform check block that confirms any update to a HashiCorp Cloud Platform (HCP) Vault cluster results in a healthy, unsealed status. Vault’s /v1/sys/health endpoint returns 200 for an initialized, unsealed, active node and 473 for a performance standby node, so the check accepts either code:

```hcl
check "hcp_vault_status" {
  data "http" "vault_health" {
    url = "${hcp_vault_cluster.main.vault_public_endpoint_url}/v1/sys/health"
  }

  assert {
    condition     = data.http.vault_health.status_code == 200 || data.http.vault_health.status_code == 473
    error_message = "${data.http.vault_health.url} returned an unhealthy status code"
  }
}
```

In addition to utilizing a check block, end-to-end tests can be written in any programming language or testing framework. This typically involves making an API call to check an endpoint after creating infrastructure. End-to-end tests typically rely on the entire system, encompassing networks, compute clusters, load balancers, and more. Consequently, these tests are usually conducted against long-lived development or production environments.

This approach ensures that the tests provide a comprehensive evaluation of the system’s functionality and integration, reflecting real-world scenarios with the complete set of components in place.

Testing Terraform modules

When you test Terraform modules, you want enough verification to ensure a new, stable release of the module for use across your organization. To ensure sufficient test coverage, write unit, contract, and integration tests for modules.

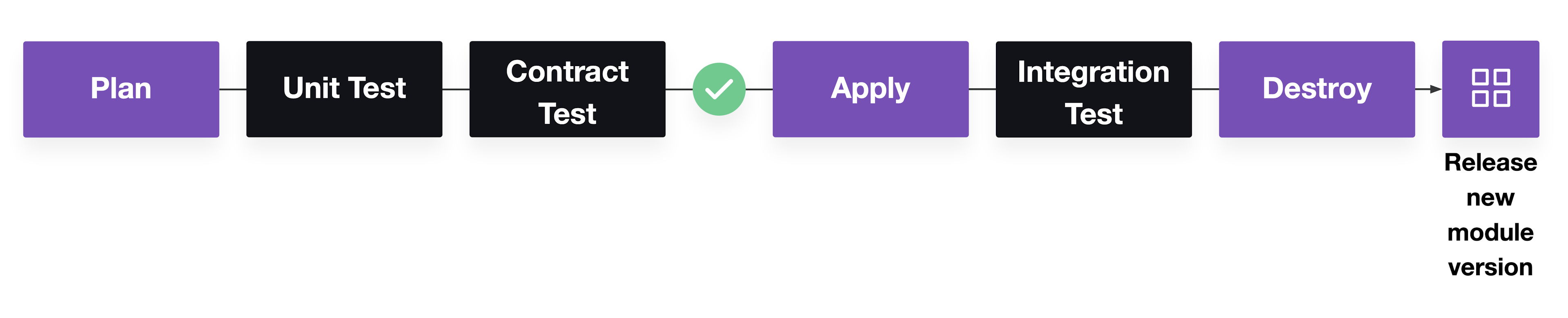

A module delivery pipeline starts with a terraform plan and then runs unit tests (and if applicable, contract tests) to verify the expected Terraform resources and configurations. Then, run terraform apply and the integration tests to check that the module can still run without errors. After running integration tests, destroy the resources and release a new module version.

Pipeline for Terraform module testing

The Terraform Cloud private registry provides a branch-based publishing workflow with automated testing capabilities. When utilizing terraform test for your modules, the private registry automatically executes these tests before releasing a module.

While testing modules, it’s important to consider the cost and coverage of module tests. To manage costs and ensure independent tracking, conduct module tests in a different project or account. This practice helps prevent module resources from unintentionally overwriting environments. Integration tests, due to their high financial and time costs, can sometimes be omitted. Tasks like spinning up databases and clusters may take half an hour or more, and frequent changes might result in multiple test instances.

To control costs, run integration tests after merging feature branches, and create only the minimum set of resources needed to test the module. If possible, avoid creating entire systems during these tests. Module testing works best for immutable resources because the tests create and then delete them. However, because these tests don’t exercise updates, they may not accurately represent the end state of brownfield (existing) resources. Consequently, while module testing gives you confidence that the module can be used successfully, it does not guarantee that module updates will apply cleanly to live infrastructure environments.

Testing Terraform configuration

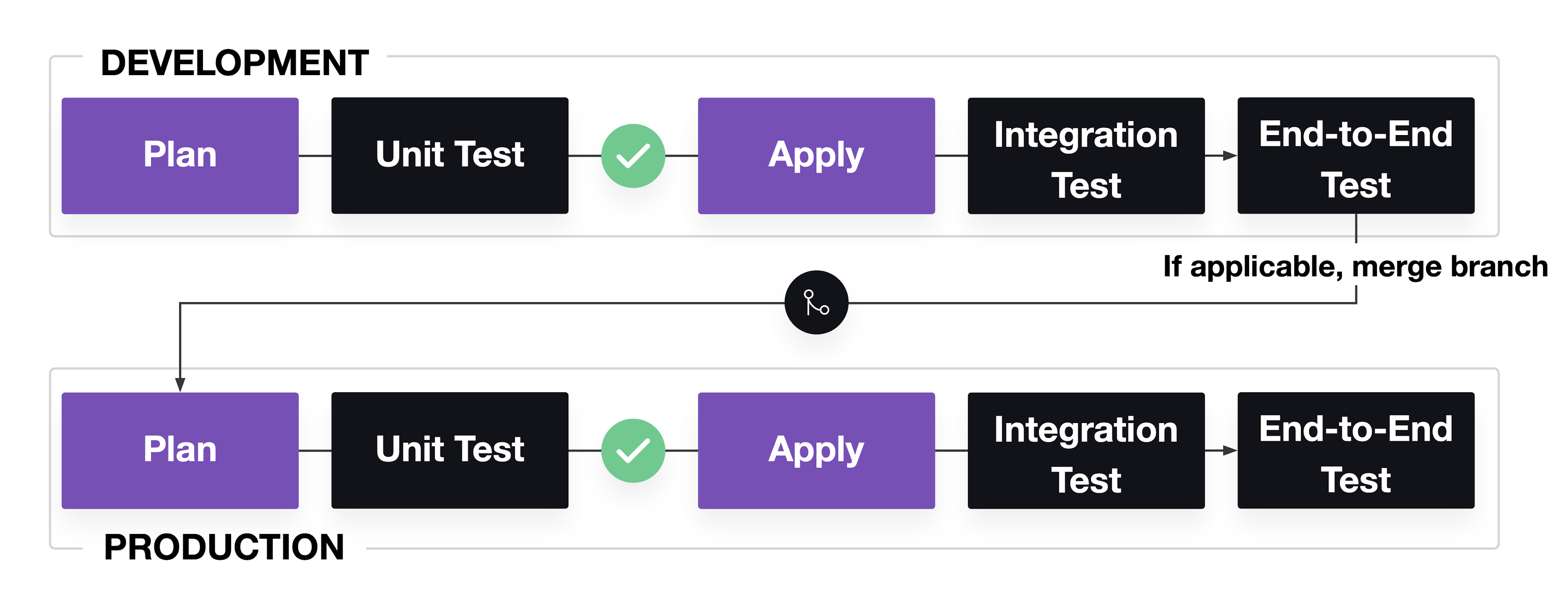

Compared to modules, Terraform configuration applied to environments should include end-to-end tests to check for end-user functionality of infrastructure resources. Write unit, integration, and end-to-end tests for configuration of active environments.

The unit tests do not need to cover the configuration in modules. Instead, focus on unit testing any configuration not associated with modules. Integration tests can check that changes successfully run in a long-lived development environment, and end-to-end tests verify the environment’s initial functionality.

If you use feature branching, merge your changes and apply them to a production environment. In production, run end-to-end tests against the system to confirm system availability.

Pipeline for Terraform configuration testing

Failed changes to active environments can have significant repercussions on critical business systems. Ideally, a long-running development environment that faithfully replicates production provides a valuable means to identify potential problems early on. However, practical considerations, such as cost constraints and the challenge of emulating user traffic, often mean that development environments are not exact replicas of production. Consequently, a scaled-down version of production is commonly used to minimize costs.

The discrepancies between development and production environments can influence test outcomes. It’s crucial to be mindful of which tests are more critical for identifying errors or may be disruptive to run. While configuration tests in development may lack the precision of their counterparts in production, they still play a vital role in catching a range of errors. Moreover, they provide an opportunity to practice applying and rolling back changes before they are introduced to the production environment. This approach helps mitigate risks and enhances the overall reliability of the deployment process.

Conclusion

In conclusion, the selection of testing strategies for Terraform modules and configurations can vary based on your system’s cost and complexity. Whether opting to write tests in your preferred programming language or testing framework, or leveraging the built-in testing frameworks and constructs provided by Terraform, a range of options is available. These encompass unit, contract, integration, and end-to-end testing, allowing for a tailored approach that aligns with the specific requirements and characteristics of your infrastructure. The key is to choose a strategy or combination of strategies that best suit your project’s needs, ensuring the reliability and correctness of your Terraform deployments.
